Not so Intelligent About Artificial Intelligence

AI governance continues to evolve at a dramatic pace. Earlier this month, US President Biden issued a comprehensive executive order, and UK Prime Minister Rishi Sunak hosted a summit to discuss AI safety, even interviewing Elon Musk. Meanwhile, OpenAI has hit dramatic turbulence, and Europe is racing to update its own AI Act.

But as governments race to catch up, this time the problem is not the anti-science delusions of figures like Ron DeSantis or Donald Trump. Instead, it is a heady mix of naïveté and cynicism that risks messing up one of the most important tasks that politics has to grapple with in the decades ahead.

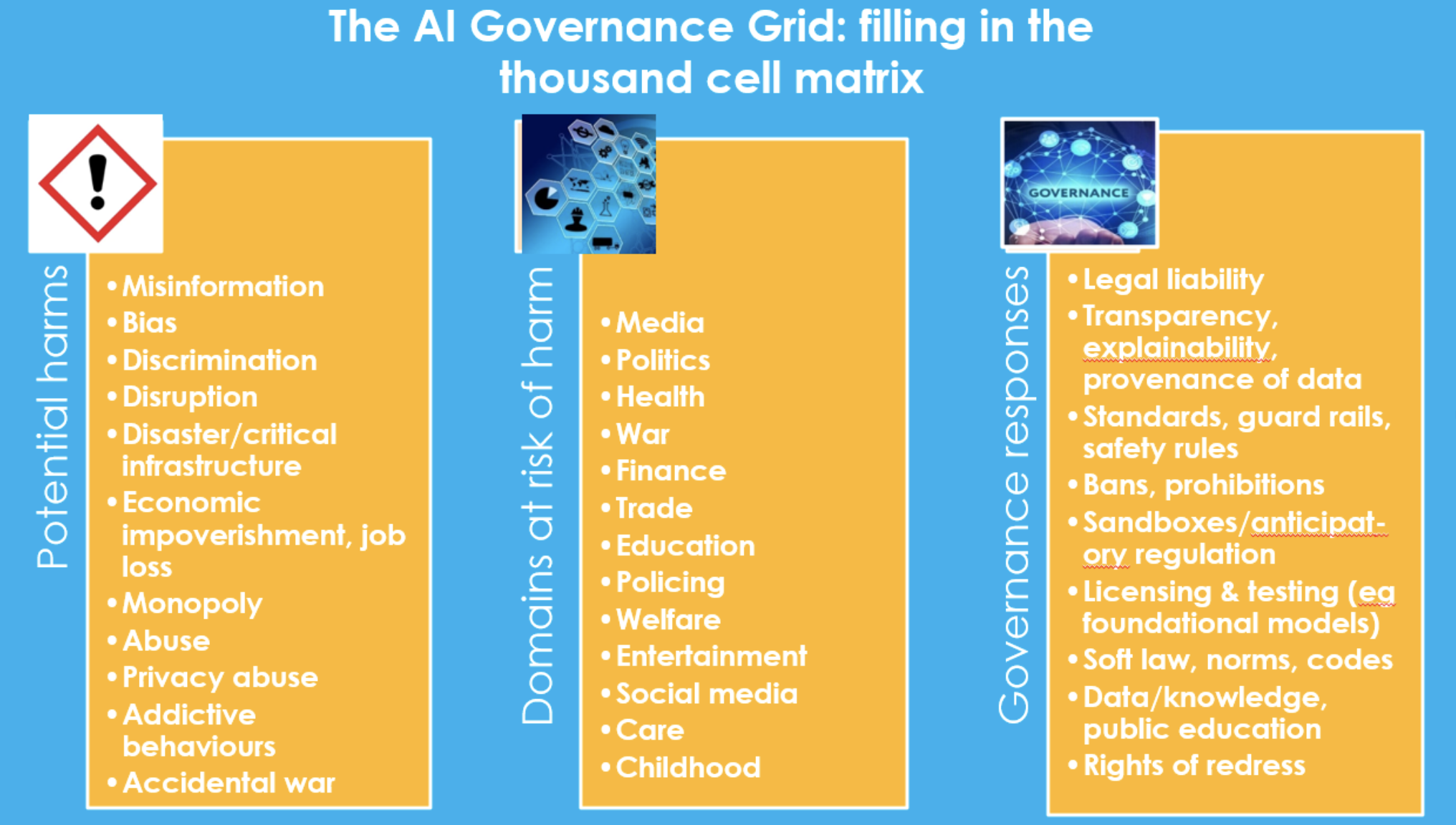

As I will show, the challenge of AI governance is like filling in a 1,000-cell matrix, full of complex details to handle specific harms, in specific sectors with specific responses. Yet most of the debate still assumes that generic solutions will be enough – like licenses for foundational models.

That this continues to be the tenor of the debate reveals just how badly stunted our capacities around technology governance continue to be.

Three big problems continue to repeat themselves. The first problem – exemplified by the UK government, but shared by many others – is to allow the agenda to be dominated by industry and not politics or the public interest: rather as if the oil industry had been asked to shape climate change policy or the big platforms had been asked to design regulations for the Internet. Governments were often so desperate to retain the support of the big firms that they relinquished their own roles – with Germany and France voting against EU regulation of large language models as just the latest example.

Throughout this year, the second problem has been the focus on fairly distant, doomsday risks while downplaying the many ways in which AI already affects daily life, from decisions on credit and debt to policing, not to mention the many scandals that have accompanied mistakes around AI in welfare and abundant evidence of bias and discrimination in fields like criminal justice. There is no doubt that the longer-term risks are important – but too often they have been talked up as a deliberate strategy to divert governments from action in the present.

Third, much of the debate continues to focus on the scientists, many very eminent. This looks at first glance quite enlightened, and some of the scientists, such as Stuart Russell, deserve much credit for filling the vacuum of government inaction. Unfortunately, however, scientists have not proven very good at governance. The pattern was formed back in 2015 when a windy declaration from figures like Demis Hassabis, the founder of DeepMind, Max Tegmark, Jaan Tallinn and Elon Musk committed to preparing for the risks that AI could bring and proposed “expanded research aimed at ensuring that increasingly capable AI systems are robust and beneficial: our AI systems must do what we want them to do.” Yet the declaration said nothing about how this might happen, and nothing did.

The pattern repeated itself this year when another open letter from 1,000 scientists called on “all AI labs to immediately pause for at least six months the training of AI systems more powerful than GPT-4.” But there was, once again, nothing on how this might be done. In the US, the public apparently supported such a pause (by a margin of roughly five to one). However, without a serious plan, there was little chance of it happening, and, once again, nothing did.

Since then, a flurry of equally windy statements has issued forth. There is, again, nothing wrong with this. But without competent institutions to implement these aspirations, they are unlikely to materialize any time soon.

Here we get to the core of the problem. There are many good examples from history of science and politics collaborating to contain risks, often with business involved too: the various treaties and institutions designed to stop nuclear proliferation and the Montréal agreements on cutting CFCs are just two. In each case, the scientists and the politicians both understood the gravity of the threat and their own division of labour.

Around AI, however, the politicians have generally been culpably negligent, while the scientists have misjudged their ability to fill the gap, proving as poorly prepared to design governance arrangements as legislators would be to program algorithms.

So what needs to be done? First, at a global level, the long journey of institution-building needs to start right away. A minimum step is to create a Global AI Observatory, with parallels to the IPCC, to ensure a common body of analysis. I and others have shown how this could work, tracking risks and likely technological developments, and providing a forum for global debate. For climate change, the IPCC provided the analysis – but the decisions on how to act on climate change had to be made by governments and politicians. A very similar division of labour will be needed for AI, and it is good that, at last, some in the industry have come around to this idea.

Second, at the national level, a new family of regulators will be needed. Eight years ago I first outlined how these might work, often in collaboration with existing regulators. I never guessed that governments would take so long to get their act together. Across the EU, national governments are set to create some institutions as part of the implementation of their new AI law, and China has set up a powerful Cyberspace Administration. Yet in the UK, my home country, the government appears to still hope that existing regulators can do the job. Without competent institutions, we risk ever more well-intentioned statements willing the ends but not willing the means.

Third, we need much better training for politicians. The failure to handle AI is a symptom of a much bigger problem. Ever more of the issues politicians face involve science – from climate change and pandemics to AI and quantum. Yet they are about the only group exercising serious power who get no training and no preparation. It is not surprising that they are easily manipulated, and that politicians who do not understand science or technology glibly promise to “follow the science” or to have an “innovation-led” approach to AI, without grasping what this means.

AI, in all of its many forms, has immense power for good and for harm. But in the decades ahead the shape of its governance will be quite different from that being talked about now. Many of the scientists talk as if generic licensing, or rules on safety, will be enough. This is quite wrong.

A better analogy is with the car. As the car became part of daily life in the 20th century, governments introduced hundreds of different rules: road-markings and speed limits, driving tests and emissions standards, speed bumps and seatbelts, drink-drive rules and countless other safety regulations. All were needed to ensure that we could get the benefits of the car without too many harms. Finance is another analogy. Governments do not regulate finance through generic principles, but rather through a complex array of rules covering everything from pensions to equity, insurance to savings, mortgages to crypto.

Much the same will be true of AI, which will need an even more complex range of rules, norms and prohibitions, in everything from democracy to education, finance to war, media to health.

Governments will have to steadily fill in what I call the ‘thousand cell matrix’, connecting potential risks to contexts and responses.

We have wasted the last decade, which should have been used to develop more sophisticated governance, as AI became part of daily life, from the courts to credit, our phones to our homes. Now, belatedly, the world is waking up. But in AI, as more generally, we will need much better hybrids of science and politics if wise decisions are to be made.

Geoff Mulgan’s new book, When Science Meets Power, is published this winter by Polity Press.